Is AI Eating Itself?

Every day, more AI models learn from data generated by other AIs instead of by humans. Picture an artist who spends years painting landscapes, then suddenly begins painting pictures of their own paintings. Each new work copies the last, maybe with a few new colors or details. At first, it’s interesting and even clever. But over time, the art loses its sharpness. The texture disappears. The mountains and skies all blend together. The artist ends up repeating their own style, painting only faint versions of what came before.

That’s similar to what’s happening online today. If you’ve ever reheated leftovers more than once, you know it doesn’t end well.

In short, AI is beginning to consume its own work.

The more this continues, the more the digital world loses its depth and becomes unfamiliar.

The loop begins

Most large language models learn by digesting massive amounts of human text, everything from Wikipedia articles and research papers to Reddit threads and forgotten blog posts. They read the internet, compress the patterns, and predict what word should come next in a sentence. That’s how they build language “intuition.”
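To make "predict what word should come next" concrete, here is a toy sketch in Python. It is not how real language models work internally (they use neural networks trained on tokens, not word counts), and the function names `train_bigram` and `predict_next` are made up for illustration. It only shows the basic idea of learning which word tends to follow which.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# A tiny stand-in for "the internet".
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "cat" ("cat" follows "the" twice, "mat" once)
```

Even at this scale, the model can only repeat what it has seen. Whatever goes into the corpus decides what comes out.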

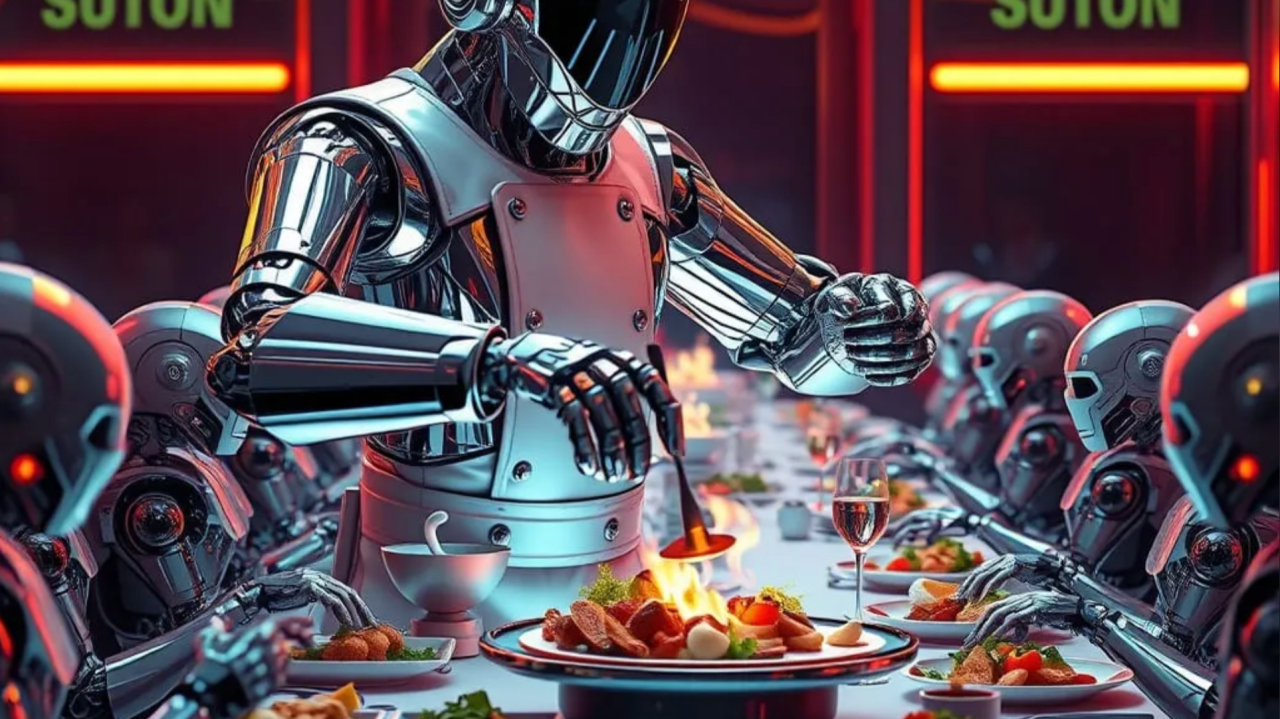

But here’s the twist. Every time someone publishes an AI-written blog post, product description, or review, that text becomes part of the internet. It joins the training pool. And the next generation of models might use it as data. Which means we’re starting to feed machine-generated text back into the system. It’s like teaching chefs by having them taste food made by robots, which learned from old recipes. At first, it works. But after a while, everyone forgets what real spices are supposed to taste like.

The internet was once messy, unpredictable, and human. Now, it’s becoming more polished by machines. As AI fills the web with automated writing, it ends up learning from its own output.

When an AI model learns, it doesn’t understand like people do. It isn’t thinking; it’s averaging. It looks at millions of examples of how words fit together and builds a map of likely patterns. When those examples come from other AIs, it’s like inbreeding in language. Patterns repeat, mistakes become habits, style shrinks, and variety disappears.

In research terms, this is called model collapse. It’s what happens when a generative model’s outputs become its future inputs. Over time, the signal decays into noise. The system becomes overconfident in wrong answers and loses its ability to surprise.

It’s like making a copy of a copy. Each time, you lose some detail. In the end, you’re left with nothing but a gray blur.

We’ve already seen early signs of this. A 2023 study by researchers at Oxford, Cambridge, and other universities found that language models trained repeatedly on the output of earlier models degraded after just a few rounds. They forgot rare words and unusual patterns first, and their output grew repetitive. The models became dull, predictable, almost robotic. Which, ironically, is what they were supposed to avoid.
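The "copy of a copy" effect can be reproduced in a toy setting. The sketch below is not the setup used in the study. It assumes a made-up vocabulary of numbered tokens with a Zipf-like frequency curve, and each "model" is nothing more than the word counts of the previous model’s output. The function name `simulate_collapse` and all parameter values are illustrative.

```python
import random
from collections import Counter

def simulate_collapse(vocab_size=500, sample_size=2000, generations=10, seed=0):
    """Track how many distinct tokens survive when each generation
    learns only from text sampled from the previous generation."""
    rng = random.Random(seed)
    tokens = list(range(vocab_size))
    # Assumed "human" distribution: a few common tokens, a long tail of rare ones.
    weights = [1.0 / (rank + 1) for rank in range(vocab_size)]
    surviving = [vocab_size]
    for _ in range(generations):
        # The current model "writes" a corpus by sampling from what it learned.
        corpus = rng.choices(tokens, weights=weights, k=sample_size)
        counts = Counter(corpus)
        # The next model learns only from that corpus: raw frequency counts.
        weights = [counts.get(token, 0) for token in tokens]
        surviving.append(sum(1 for w in weights if w > 0))
    return surviving

if __name__ == "__main__":
    for generation, count in enumerate(simulate_collapse()):
        print(f"generation {generation}: {count} distinct tokens still in use")
```

The count can never go back up. Once a rare token fails to appear in one generation’s output, the next generation gives it zero probability and it is gone for good. Real models are far more complex, but the direction of the drift is the same: the tail disappears first.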

At first, this might sound like a technical issue for data scientists. But it’s more important than that. Our digital world now relies on content made by AI. Search engines use AI to summarize pages. Marketing teams use it for campaigns. Even journalists use AI to draft articles. When the original material is artificial, everything built on top of it changes.

Imagine a library where half the books were secretly written by a machine that read the other half. You might still learn things, but your sense of truth would wobble. There’s no fresh input, no grounding in reality. Just remix upon remix.

That’s where we’re heading unless we change how these systems feed.

Here’s the troubling part. The only groups still adding large amounts of new data online are those with something to sell or promote. Advertisers, political groups, and influence campaigns are the most active, and often the most manipulative, content creators.

This means that as the web becomes more synthetic, the remaining human input will skew heavily toward persuasion and control.

So as AI consumes its own output, it’s also mixing in propaganda.

The poisoned buffet

Every major language model acts like a sponge on the internet, absorbing everything it finds. If most of what’s out there is ads, fake news, and influencer content, that’s what the model learns to imitate.

We’re already seeing signs of this problem. Models start to repeat brand slogans, use political language, and copy stereotypes, not on purpose, but because those patterns are everywhere in their training data.

Now, consider the next step: AI-generated propaganda. Bots write fake news stories. Fake online identities spread planned messages. Deepfake videos get captions and text descriptions, which then serve as additional training data. The difference between real and artificial content fades until it’s impossible to tell them apart.

It’s like an ecosystem where only invasive species survive. As they spread, there’s less space for diversity.

This is the internet’s version of ecological collapse. Except instead of forests and rivers, it’s our information commons that’s getting overrun.

Philosopher Jean Baudrillard warned that when copies seem more real than reality, we enter a world of ‘simulacra.’ This means a world full of mirrors, where signs point only to other signs, not to real things.

That’s where generative AI is steering us.

When you ask a model to explain something now, it may seem like you’re getting a summary of human knowledge. But more often, you’re reading a remix of other remixes, a map made from other maps. As each new generation learns from the last, the system drifts further from the real world it was supposed to reflect.

Weather models stop predicting storms well because their data is artificial. Translation models invent phrases that never existed. Chatbots make up laws that sound real but aren’t. This isn’t intentional; it’s just the result of the data repeating itself.

We’ve created a system that only reflects itself, over and over.

How we got here

The incentive structure is simple. AI content is cheap. It scales fast. It fills websites, blogs, and feeds without needing sleep or salaries. So companies flood the internet with it. SEO farms pump out machine-written posts. Publishers quietly use AI to summarize their archives. Even small creators use it to keep up with the feed’s pace.

So now we have billions of new pages being generated every month. Most of them are never read by humans. But they’re still data. They still count.

When the next major AI model scans the web, it will find those pages and treat them as being just as real as any original writing. The model can’t tell if an essay was written by a person or by a machine. Both look like proper text, follow grammar, and make sense.

But meaning is not the same as correct grammar. That’s the sad part… We’re teaching machines to speak fluently about a world that’s slowly losing its own authenticity.

Can we interrupt this process?

Not entirely. We can’t stop it completely, but we can slow it down. Some researchers want filters that detect AI-generated content before it enters the training pool. Others propose using “data passports,” where each dataset records its provenance. Some want to build dedicated human-only repositories, a kind of cultural seed bank for future AI.
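Here is a rough sketch of what a provenance check might look like in code. Everything in it is hypothetical: there is no standard "data passport" format, and the `Document` fields, the origin labels, and the `filter_for_training` function are invented for illustration. It also assumes the labels are trustworthy, which is the hard part in practice.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    """A piece of text plus a minimal, hypothetical provenance record."""
    text: str
    source: str   # where the text was collected
    origin: str   # assumed labels: "human", "synthetic", or "unknown"

def filter_for_training(docs, allow_unknown=False):
    """Keep only documents whose recorded origin is acceptable for training."""
    allowed = {"human"}
    if allow_unknown:
        allowed.add("unknown")
    return [doc for doc in docs if doc.origin in allowed]

# Made-up example records.
docs = [
    Document("Notes from my hike last weekend.", "personal blog", "human"),
    Document("Top 10 hiking boots you need now.", "SEO farm", "synthetic"),
    Document("Trail conditions, no author listed.", "forum scrape", "unknown"),
]

strict = filter_for_training(docs)
lenient = filter_for_training(docs, allow_unknown=True)
print(len(strict), len(lenient))  # prints "1 2"
```

The `allow_unknown` switch captures the real trade-off. A strict filter keeps the training pool clean but throws away most of the web, because most text carries no provenance at all.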

It’s like saving something precious before everything becomes uniform.

But the real solution might be social, not technical. We need to appreciate original human content again, reward it, and make it easy to find. Right now, the internet only cares about what ranks highest, not who wrote it. That’s like judging music only by how loud it is.

If we want AIs that think clearly, we need to feed them clarity. And that comes from us.

Let’s be honest: the next few years will be strange. We already live in a world where AI writes news, scripts, and code. Soon, it will comment on its own work, review its own reviews, and even quote itself quoting itself.

Imagine a chatbot citing a blog post written by another chatbot, which is based on a tweet from a bot trained on summaries of chatbots. It’s a strange loop, almost like Black Mirror creating its own episodes.

And what about humans in this cycle? We become editors, curators, and the ones who check for sense. We’re the last defense against a loop of artificial content.

That’s both empowering and terrifying.

Because if we tune out, if we let the machines talk to each other unchecked, they’ll drift. Not into sentience, but into incoherence.

My quiet theory

I think we’re seeing something new. A second internet is forming under the first. The human web and the machine web overlap, but they aren’t the same. The machine web doesn’t care about truth; it cares about patterns. It repeats itself and is shaped by algorithms that reward what’s familiar.

Over time, these two webs will grow apart. One will be noisy, full of human mess, creativity, and bias. The other will be smooth, polished, and always the same. We’ll have to choose which one we trust.

That’s the real danger of AI repeating itself. It doesn’t get too smart; it gets too certain, too uniform, and too confident in its own artificial answers. Call it the content singularity.

When everything starts to sound the same, even the truth becomes dull.

So, is AI eating itself? Yes. And it’s getting indigestion.

The more synthetic content floods the web, the more the next generation of models will choke on it. The flavors blur. The creativity dulls. The richness of human language thins out like overused paint water.

It doesn’t have to end here. It should not end here!

The future of AI, and the culture it consumes, is a choice. By deciding what goes in, we shape whether machines mirror our creativity or drown in sameness. The next move is ours. Let’s make it count.

Every sentence we write and every story we share is a seed in the data garden. The next generation of models will grow from what we give them.

If we want AIs that think clearly, we need to keep writing like humans. Not just typing words, but saying something.

That’s the meal worth serving.